Streamlining Real-Time Web Communication with Pushpin

Introduction

As user experience takes center stage in today's digital landscape, real-time functionality in web applications is no longer a luxury but a necessity. It offers instant updates and dynamic interactions that users have come to expect. WebSockets, SSE (Server-Sent Events), and long-polling are the foundational technologies powering real-time communication between clients and servers, each bringing its distinct advantages and challenges. This article delves into these technologies, introducing Pushpin as a streamlined solution for implementing the real-time web.

Understand and Compare WebSockets, SSE, and Long-Polling

WebSockets

WebSockets facilitate a persistent, full-duplex communication channel over a single, long-lived connection, enabling servers to push updates to clients instantly upon data changes. Ideal for chat applications, online gaming, and live sports updates, WebSockets efficiently cater to high-frequency interaction scenarios.

Server-Sent Events (SSE)

SSE, like WebSockets, lets servers send updates to clients. However, SSE carries data in one direction only (server to client) over a single HTTP connection. It shines in scenarios like feed updates and news tickers, where the client rarely needs to send data back.

Long-Polling

Long-polling is an HTTP-based technique in which the client sends a request that the server holds open until it has data to send back. The client then immediately issues a new request, which mimics a real-time connection. Commonly used in applications where updates are infrequent and unpredictable, it offers a simpler, HTTP/1.1-friendly approach to real-time.

Navigating through Inherent Complexities

Implementing and managing WebSockets, SSE, and long-polling directly through an application server presents several challenges:

- Connection Management: Maintaining persistent connections, ensuring optimal utilization of resources, and managing connection lifecycles is resource-intensive.

- Scalability: Scaling applications while ensuring that real-time updates are broadcast to all connected clients becomes intricate.

- Failover Management: Ensuring that the system gracefully recovers from failures while maintaining data integrity and connection stability requires meticulous handling.

- Cross-Compatibility: Ensuring that the real-time functionality adapts to varied client capabilities and network conditions demands additional logic and handling.

Introduction to GRIP

The Generic Realtime Intermediary Protocol (GRIP) is a specification tailored for managing real-time data transport on the web. Built upon the foundation of HTTP, GRIP is designed to add a layer of abstraction over various real-time communication methods such as WebSockets, Server-Sent Events (SSE), and long-polling.

When we talk about real-time communication, especially in a scalable and distributed system, we often encounter issues such as load balancing, maintaining persistent connections, broadcasting to multiple clients, and handling reconnections. GRIP elegantly addresses these issues by using intermediaries, like Pushpin, to maintain persistent client connections and allowing the main server application to remain stateless.

How does GRIP work?

GRIP-enabled intermediaries stand between the client and the application server. They understand the GRIP protocol and can maintain persistent connections with clients, manage subscriptions, and broadcast messages. The application server communicates with these intermediaries using regular HTTP, allowing it to remain stateless and unaware of the connected clients.

Diagram depicting the role of GRIP in managing real-time communication.

Understanding the Grip-Hold Directive

Among the various headers that GRIP introduces, Grip-Hold holds a prominent place. It essentially instructs the GRIP proxy on how to handle an incoming client connection.

There are two primary values for Grip-Hold:

- stream: This value is used when the intention is to set up a streaming response. The GRIP proxy will keep the connection open indefinitely, allowing for data to be pushed from the server to the client in real-time. This is particularly useful for Server-Sent Events (SSE) or any other streaming mechanism where data is sent in a one-way direction from server to client.

- response: This value is suitable for long-polling scenarios. The GRIP proxy holds the client connection open until a response is ready or a specified timeout is reached. Clients essentially "wait" for the response, and once the data is available, it's sent through the open connection. After sending the data or upon timeout, the connection is closed.

In the context of Pushpin, using Grip-Hold with a value of "response" is like telling Pushpin: "Hold onto this request until I have something to send or until it's time to time out." Conversely, setting it to "stream" implies an ongoing stream of data from server to client.

Managing Subscriptions using Grip-Channel Header

Another important header introduced by the GRIP protocol is Grip-Channel. It essentially denotes the "channel" or "topic" to which a client is subscribing. In pub/sub (publish/subscribe) systems, channels or topics are common mechanisms to categorize and filter messages. Clients express interest in a particular topic, and servers push data categorized under that topic.

For instance, in a chat application, each room could be represented by a unique channel. Users connected to a particular room would subscribe to its corresponding channel, ensuring they only receive messages pertinent to that room.

In Pushpin's context, a client asks for real-time updates by making an ordinary request, which Pushpin forwards to the backend. The backend's response carries the Grip-Channel header naming the channel; Pushpin then subscribes the held connection to that channel and forwards any messages published to it. In this article's demo, the client states which channel it wants in a request header, and the backend copies that name into the Grip-Channel response header.

For instance, if a client is interested in receiving live score updates from a soccer match between Team A and Team B, the channel might be named live_scores_teamA_vs_teamB. When the server publishes new scores to this channel, Pushpin ensures that each client subscribed to it receives the updates.

    GET /live/scores HTTP/1.1
    Host: example.com
    Accept: application/json
    Grip-Channel: live_scores_teamA_vs_teamB

On the server side, when a score update arrives, the server doesn't need to know which clients are subscribed or how many there are. It simply publishes the update to the live_scores_teamA_vs_teamB channel, and Pushpin takes care of delivering the message to all the appropriate clients. This level of abstraction simplifies the server's role, ensuring it remains stateless and scalable.

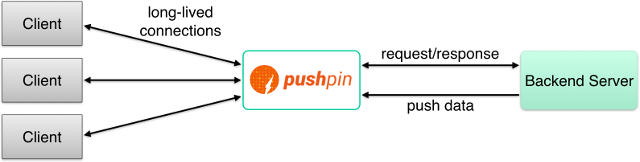

Introducing Pushpin

Pushpin, an open-source reverse proxy server, emerges as a robust solution, abstracting the complexities of handling WebSockets, SSE, and long-polling. Pushpin retains persistent connections with clients, while your server remains stateless, communicating with Pushpin as needed via simple HTTP and ZeroMQ interfaces.

Simplifying Real-Time Web with Pushpin

Pushpin addresses the challenges associated with direct real-time communication management in the following ways:

- Decouples Connection Management: By handling client connections and managing the persistence, Pushpin allows your server to remain stateless, focusing on business logic.

- Simplifies Scalability: Pushpin can replicate and distribute real-time updates across multiple instances, ensuring all clients receive updates even in scaled, load-balanced architectures.

- Facilitates Failover: As Pushpin maintains client connections, it can hold or re-establish connections in failure scenarios, ensuring consistent communication.

- Ensures Compatibility: With built-in support for WebSockets, SSE, and HTTP streaming/long-polling, Pushpin ensures delivery of real-time updates across varied network conditions and client capabilities.

For example, in a chat application, rather than managing WebSocket connections directly, the server simply instructs Pushpin to broadcast messages to the appropriate clients upon receiving new messages, keeping the logic streamlined and focused on core functionality.

Pushpin in Action

Let's explore Pushpin in action by building a simple chat application. The application consists of a server, which handles the business logic; Pushpin, which manages the real-time communication; and a frontend client.

I've created a repository which includes the source code for the server, the Pushpin configuration, and the frontend client. You can find the repository at https://github.com/HaswinVidanage/go-pushpin-demo

Follow the steps in the README to run the backend server and Pushpin. Once both are running, you can run the frontend clients.

docker-compose up --build -d

Notice that the docker-compose file includes a route for the backend server. This route instructs Pushpin to forward all matching requests to the backend server.

Let's take a closer look at the routes file.

The route means that all traffic reaching the Pushpin server is forwarded to the backend server, which runs on host host.docker.internal, port 8000.

Now let's focus on the backend server. I've used Go for the sample backend server. It exposes a REST endpoint to publish messages to a channel, plus three endpoints for SSE, long-polling, and WebSocket clients to subscribe to the channel.

I've set up a few routes and started a basic HTTP server.

- /sse: Handles Server-Sent Events

- /longpoll: Handles long-polling

- /websocket: Handles WebSocket connections

- /publish: Handles publishing messages to the channel

Each route is wrapped by a handlePreflight function, which handles the preflight request and otherwise passes the actual request to the handleRequest function for that route.

Now let's take a look at the handleRequestSSE and handleRequestLongPolling functions.

Both handlers are similar and do a few things:

- Set the Grip-Channel header to the channel name provided in the request header

- Set the Grip-Hold header to either stream or response based on the request type

- Set CORS headers and optionally check for an authorization token as required.

For WebSocket clients, the server upgrades the initial HTTP connection to a WebSocket connection using the Upgrade function provided by the Go websocket package's Upgrader. The crucial part here is the third parameter, where we set the Sec-WebSocket-Extensions header to specify the GRIP extension.

- grip: This signifies that Pushpin should treat this WebSocket connection according to the GRIP protocol.

- message-prefix: This is an optional parameter allowing you to specify a prefix for messages. In the provided code, it's set to an empty string, meaning no prefix.

When this handshake reaches Pushpin, the GRIP extension header tells Pushpin to take control of this WebSocket connection, allowing it to manage subscriptions, message delivery, and any other GRIP-related features over the connection. Once Pushpin recognizes this, it acts as an intermediary for the WebSocket, listening for published messages that need to be broadcast to the client.
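To make the handshake concrete, here is a small standard-library sketch of the two pieces the backend contributes: the response header carrying the GRIP extension, and a control message asking Pushpin to subscribe the connection to a channel. The function names are hypothetical. Pushpin's WebSocket mode uses text messages prefixed with `c:` for control instructions, and the header returned here is what would be passed as the third argument to the Upgrader's Upgrade call described above.

```go
package main

import (
	"encoding/json"
	"fmt"
	"net/http"
)

// gripHandshakeHeader returns the extra response headers for the WebSocket
// handshake. message-prefix="" means ordinary messages carry no prefix.
func gripHandshakeHeader() http.Header {
	h := http.Header{}
	h.Set("Sec-WebSocket-Extensions", `grip; message-prefix=""`)
	return h
}

// subscribeControlMessage builds the text frame the backend sends after the
// upgrade so that the proxy subscribes this connection to the channel.
// Control messages are the JSON instruction prefixed with "c:".
func subscribeControlMessage(channel string) (string, error) {
	b, err := json.Marshal(map[string]string{
		"type":    "subscribe",
		"channel": channel,
	})
	if err != nil {
		return "", err
	}
	return "c:" + string(b), nil
}

func main() {
	fmt.Println(gripHandshakeHeader().Get("Sec-WebSocket-Extensions"))
	msg, err := subscribeControlMessage("room-1")
	if err != nil {
		panic(err)
	}
	fmt.Println(msg)
}
```

The proxy intercepts the control message rather than relaying it, so the client never sees it.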

Finally, let's take a look at the publish function.

The publish function takes the message from the request body and publishes it to the channel specified in the request header.

Here I'm using go-gripcontrol and go-pubcontrol libraries to publish messages to the channel.

    pub := gripcontrol.NewGripPubControl([]map[string]interface{}{
        {
            "control_uri": "http://localhost:5561",
        },
        {
            "control_uri": "http://localhost:5560",
        },
    })

In this section, we're initializing the GripPubControl object, which is essentially a publisher interface that can send messages to Pushpin. Each control_uri specifies the address of a local Pushpin instance. You can think of this as telling the application where to send messages to ensure they are broadcast in real-time to clients. We then format the message for SSE, long-polling, and WebSocket clients and publish the message to the channel.

I've also created 2 Frontend clients, one using React JS and the other using React Native. Both clients are using the same backend server and Pushpin instance to communicate with the server.

Demo - Frontend Client Application - React JS

    git clone https://github.com/HaswinVidanage/go-pushpin-demo.git
    cd go-pushpin-demo/app/streaming-fe-app
    npm install
    npm start

Frontend client using SSE, WS, and long-polling to publish and subscribe to the Pushpin server. Source: https://github.com/HaswinVidanage/go-pushpin-demo/tree/main/app/streaming-fe-app

Demo - Mobile App, Client Application - React Native

    git clone https://github.com/HaswinVidanage/go-pushpin-demo.git
    cd go-pushpin-demo/mobile/streamapp
    npm install
    npm start

Mobile client using SSE, WS, and long-polling to publish and subscribe to the Pushpin server. Source: https://github.com/HaswinVidanage/go-pushpin-demo/tree/main/mobile/streamapp

Making Pushpin Scalable for Production Use

As you might have observed in my docker-compose file, I've specified two Pushpin servers and hardcoded them into the backend server. We then fan-out our message to the hardcoded Pushpin servers during publishing. This is not ideal for production use.

In a production environment, you would typically deploy multiple Pushpin servers to scale the solution horizontally. To accommodate this setup, there's a need for dynamic server discovery. In the context of my use case, I employ a headless service in Kubernetes for the seamless discovery of Pushpin servers.

Now, on the backend server, use the service discovery mechanism to find the Pushpin servers.

    import (
        "fmt"

        "github.com/miekg/dns"
    )

    ...

    // getServicePodIPs fetches the Pushpin pod IPs from the headless service
    // by asking the cluster DNS for the service's A records.
    func getServicePodIPs(serviceName, namespace string) ([]string, error) {
        m := new(dns.Msg)
        m.SetQuestion(dns.Fqdn(fmt.Sprintf("%s.%s.svc.cluster.local", serviceName, namespace)), dns.TypeA)

        in, err := dns.Exchange(m, "kube-dns.kube-system.svc.cluster.local:53")
        if err != nil {
            return nil, err
        }

        // A headless service answers with one A record per ready pod.
        var ips []string
        for _, record := range in.Answer {
            if t, ok := record.(*dns.A); ok {
                ips = append(ips, t.A.String())
            }
        }
        return ips, nil
    }

    // now use the function to fetch the Pushpin IPs and alter the handlePublish function
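One way to wire the discovered IPs into publishing is to turn them into the same configuration shape that was hardcoded earlier. The helper below is a hypothetical sketch (the name `buildPubConfig` and the sample IPs are assumptions); it takes the control port as a parameter, with 5561 used in the example because that is the control port shown in the hardcoded configuration.

```go
package main

import (
	"fmt"
	"net"
	"strconv"
)

// buildPubConfig converts discovered pod IPs into the slice of
// {"control_uri": ...} maps that the publisher is configured with.
func buildPubConfig(ips []string, controlPort int) []map[string]interface{} {
	config := make([]map[string]interface{}, 0, len(ips))
	for _, ip := range ips {
		// JoinHostPort also brackets IPv6 addresses correctly.
		hostPort := net.JoinHostPort(ip, strconv.Itoa(controlPort))
		config = append(config, map[string]interface{}{
			"control_uri": "http://" + hostPort,
		})
	}
	return config
}

func main() {
	// In the real handler these IPs would come from getServicePodIPs.
	for _, entry := range buildPubConfig([]string{"10.0.0.4", "10.0.0.5"}, 5561) {
		fmt.Println(entry["control_uri"])
	}
}
```

Because pods come and go, the lookup would need to be repeated periodically or on each publish rather than done once at startup; which of those fits depends on publish volume.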

As for vertical scalability, here is what the Pushpin README says:

Pushpin has been tested with up to 1 million concurrent connections running on a single DigitalOcean droplet with 8 CPU cores. In practice, you may want to plan for fewer connections per instance, depending on your throughput. The new connection accept rate is about 800/sec (though this also depends on the speed of your backend), and the message throughput is about 8,000/sec. The important thing is that Pushpin is horizontally scalable which is effectively limitless.

Conclusion

Implementing real-time web functionality is crucial for improving user experience, but it comes with its complexities and management challenges. Pushpin addresses these challenges, simplifying real-time communication and allowing developers to concentrate on core business logic.

Note that this article is not a comprehensive guide to Pushpin; I've only covered the basics of Pushpin and GRIP. I would encourage you to explore the Pushpin documentation and try out the examples to get a better understanding. Pushpin is also offered as a service by Fanout, and you can check out their documentation to learn more about it.