Transforming Monolith to Microservices: Leveraging CDC, Debezium, and Kafka in Microservice Migration

Introduction

In this guide, we're going to embark on an exploratory journey, progressively migrating from a monolithic architecture to a microservice-oriented model using Debezium, Kafka, and event-driven patterns.

This approach stems from my hands-on experience with my current project at NE Digital. Over the past few years, we have been maintaining and progressively enhancing a significant monolithic application. Built with Golang and backed by a MySQL database, this application thrives within Google Kubernetes Engine (GKE) and has been architected to scale horizontally.

However, despite implementing numerous optimizations, it became increasingly evident that our monolith was reaching its scalability limits. The surge in traffic and growing complexity of the application posed significant challenges. Additionally, maintaining the application became increasingly difficult as the codebase grew more cumbersome to navigate and comprehend. Individual squads encountered challenges in managing the system without inadvertently introducing breaking changes to one another.

Given the challenges of managing a large-scale application and a dynamic team, along with the need to sustain the rhythm of feature development to meet our ambitious product roadmap, a sudden, wholesale ("big bang") migration was off the table. The question then presents itself: How do we facilitate this transition?

The answer lies in incremental adaptation. Our strategy is to simultaneously nurture our monolith and cultivate our budding microservices, all while ensuring we avoid introducing additional traffic to the already strained resources of our monolithic system.

This post uses an arbitrary example, unrelated to the project, to explain the design principles behind the migration. The example is a simple e-commerce application that allows users to purchase products from vendors and sends notifications to the users.

The Core Idea: Decoupling the Monolith

A cornerstone in our migration strategy is ensuring that our new microservices, as they come to life, do not impose an additional burden on the monolith's resources. To realize this objective, we incorporate a crucial technique: decoupling the new services from the monolithic database.

Enter Debezium, an open-source platform specializing in Change Data Capture (CDC). Its function is to tap into our MySQL database, monitoring the minute changes occurring within its tables. Every operation - be it an insert, update, or delete in the monitored database tables - propels Debezium into action. It captures the event, morphs it into an event object, and shuttles it off to a Kafka topic.

Here, Kafka plays a vital role as an event streaming conduit. It securely holds these changes as a stream of records, exhibiting durability and fault-tolerance. This arrangement empowers our microservices to consume these events at their own leisure, free from any direct strain on the monolith's database.

    {
      "payload": {
        "after": {
          "id": 100001,
          "firstname": "John",
          "lastname": "Doe",
          "email": "johndoe@mail.com",
          "active": true,
          "created_at": "2023-06-29T22:31:46Z",
          "updated_at": "2023-06-29T22:31:46Z"
        }
      }
    }
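To make the shape of this event concrete, here is a minimal Go sketch of how a consumer might decode it. The type and function names (`UserRow`, `ChangeEvent`, `DecodeUserEvent`) are illustrative assumptions, and the struct models only the fields shown in the sample above. A real Debezium envelope carries more metadata, and its timestamp and boolean encodings depend on the column types and converter settings.

```go
package main

import (
	"encoding/json"
	"fmt"
	"time"
)

// UserRow mirrors the "after" image in the sample event (hypothetical type name).
type UserRow struct {
	ID        int64     `json:"id"`
	FirstName string    `json:"firstname"`
	LastName  string    `json:"lastname"`
	Email     string    `json:"email"`
	Active    bool      `json:"active"`
	CreatedAt time.Time `json:"created_at"` // assumes RFC 3339 strings, as in the sample
	UpdatedAt time.Time `json:"updated_at"`
}

// ChangeEvent models only the part of the envelope shown in the sample.
type ChangeEvent struct {
	Payload struct {
		After *UserRow `json:"after"` // a pointer, so a missing image decodes to nil
	} `json:"payload"`
}

// DecodeUserEvent parses a raw Kafka message value into a ChangeEvent.
func DecodeUserEvent(raw []byte) (ChangeEvent, error) {
	var ev ChangeEvent
	if err := json.Unmarshal(raw, &ev); err != nil {
		return ChangeEvent{}, fmt.Errorf("decode change event: %w", err)
	}
	return ev, nil
}

// sampleEvent is the event from the article, collapsed onto one line.
const sampleEvent = `{"payload":{"after":{"id":100001,"firstname":"John","lastname":"Doe","email":"johndoe@mail.com","active":true,"created_at":"2023-06-29T22:31:46Z","updated_at":"2023-06-29T22:31:46Z"}}}`

func main() {
	ev, err := DecodeUserEvent([]byte(sampleEvent))
	if err != nil {
		panic(err)
	}
	fmt.Println(ev.Payload.After.ID, ev.Payload.After.Email)
}
```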

We designate a specific microservice for each core table in the monolithic database. These 'Facade Services' or 'Replica Services' listen to the changes streamed by Debezium to Kafka. Their mission is twofold: making sense of these changes and maintaining a synchronized state with the monolith's database in their local data stores.

Take, for example, our User service. It tunes in to the changes in the 'User' table in the monolithic database. Any action - a new user joining, an existing user updating their information, or a user leaving - triggers Debezium to capture these changes and dispatch them to Kafka. The User service, subscribed to the respective Kafka topic, processes these changes and updates its local data store. This dynamic also provides an opportunity to refactor and tailor the schema of a new user microservice to better suit the microservice architecture.

This approach allows us to refactor the schema of a core table such as 'User' in the early stages of the migration, and even to switch from MySQL to an alternative database that is a better fit for purpose. This is a huge advantage, as we avoid having to refactor the monolith to accommodate the new schema or database.
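As a rough illustration of that kind of schema refactoring, the sketch below maps a row in the monolith's shape onto a reshaped record owned by the new service. The target `Account` type, its fields, and the `ToAccount` function are hypothetical, chosen only to show the idea. The actual target schema would depend on the needs of each service.

```go
package main

import (
	"fmt"
	"strings"
)

// MonolithUser is the row shape as it arrives from the monolith's table.
type MonolithUser struct {
	ID        int64
	FirstName string
	LastName  string
	Email     string
	Active    bool
}

// Account is a hypothetical refactored schema owned by the new User service.
type Account struct {
	ID          int64
	DisplayName string // first and last name merged into one field
	Email       string // trimmed and lower-cased
	Status      string // "active" or "inactive" instead of a boolean flag
}

// ToAccount translates a monolith row into the new service's schema.
func ToAccount(u MonolithUser) Account {
	status := "inactive"
	if u.Active {
		status = "active"
	}
	return Account{
		ID:          u.ID,
		DisplayName: strings.TrimSpace(u.FirstName + " " + u.LastName),
		Email:       strings.ToLower(strings.TrimSpace(u.Email)),
		Status:      status,
	}
}

func main() {
	a := ToAccount(MonolithUser{
		ID: 100001, FirstName: "John", LastName: "Doe",
		Email: "JohnDoe@mail.com", Active: true,
	})
	fmt.Printf("%+v\n", a)
}
```

Keeping the translation in one small function means the monolith's column layout stays at the edge of the new service and does not spread through its code.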

Thus, the microservices stay updated with the latest data from the monolith without directly querying its database, keeping the monolith's resources unfettered. This elegant arrangement paves the way for a seamless transition from our monolith to a constellation of finely-tuned, purposeful microservices.

Harnessing Change Data Capture with Debezium

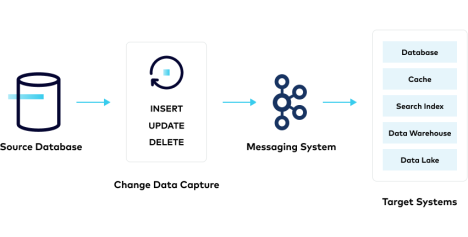

Change Data Capture (CDC) is a design pattern that enables capturing changes made in a database and delivering them in a well-defined format to downstream consumers. CDC can track and respond to all insert, update, and delete operations that occur in a database, effectively capturing the evolving state of the data. The key advantage of CDC is that it allows other systems or services to react to changes in the database without needing to perform expensive queries or batch processing jobs.

Change Data Capture, Source - Confluent


In our setup, Debezium, an open-source distributed platform, plays the role of a CDC tool. Specifically, we employ the Debezium MySQL source connector, which monitors the MySQL binary log, also known as the binlog. The binlog is a log file that records changes made to the database in the form of 'events'. These events describe how the database's data has been modified, with an event being produced for each DML operation (insert, update, delete) performed on the database.

Once any initial snapshot of the existing rows has completed, the Debezium MySQL source connector reads these events from the binlog rather than issuing 'select' queries against the tables. This binlog-based approach is non-intrusive and imposes minimal load on the database, hence preserving the database resources. For each event, Debezium creates a change event record that includes the state of the affected row, both before and after the event. These change event records are then sent to Apache Kafka, forming a stream of records that reflect the ongoing changes in the database. Thus, Debezium enables our microservices to react to changes in the database in near-real-time, contributing significantly to our decoupling and data replication strategy.
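The before and after images, together with the operation code Debezium puts in the `op` field ("c" for create, "u" for update, "d" for delete, "r" for a snapshot read), are enough to keep a local replica in sync. The following is a minimal sketch under simplifying assumptions: the `Row`, `Envelope`, and `Store` names are hypothetical, the store is an in-memory map rather than a real database, and only two columns are modeled.

```go
package main

import (
	"encoding/json"
	"fmt"
)

// Row models two columns of the replicated table (hypothetical, trimmed down).
type Row struct {
	ID    int64  `json:"id"`
	Email string `json:"email"`
}

// Envelope models the parts of a Debezium change event used here.
type Envelope struct {
	Payload struct {
		Op     string `json:"op"`
		Before *Row   `json:"before"`
		After  *Row   `json:"after"`
	} `json:"payload"`
}

// Store is an in-memory stand-in for a service's local data store.
type Store map[int64]Row

// Apply updates the local replica from one raw change event.
func (s Store) Apply(raw []byte) error {
	if len(raw) == 0 {
		return nil // tombstone message emitted after a delete: nothing to apply
	}
	var e Envelope
	if err := json.Unmarshal(raw, &e); err != nil {
		return fmt.Errorf("decode change event: %w", err)
	}
	p := e.Payload
	switch p.Op {
	case "c", "u", "r": // create, update, snapshot read: upsert the after image
		if p.After == nil {
			return fmt.Errorf("op %q without an after image", p.Op)
		}
		s[p.After.ID] = *p.After
	case "d": // delete: the key comes from the before image
		if p.Before == nil {
			return fmt.Errorf("delete without a before image")
		}
		delete(s, p.Before.ID)
	default:
		return fmt.Errorf("unsupported op %q", p.Op)
	}
	return nil
}

func main() {
	s := Store{}
	events := []string{
		`{"payload":{"op":"c","before":null,"after":{"id":1,"email":"a@mail.com"}}}`,
		`{"payload":{"op":"u","before":{"id":1,"email":"a@mail.com"},"after":{"id":1,"email":"b@mail.com"}}}`,
	}
	for _, ev := range events {
		if err := s.Apply([]byte(ev)); err != nil {
			panic(err)
		}
	}
	fmt.Println(s[1].Email)
}
```

Because creates and updates are both applied as upserts, replaying the same event twice leaves the store unchanged, which is convenient when the consumer may see a message more than once.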

Breaking Down Services: Facade and Main Services

Our migration strategy involves segregating the system into two distinct types of services - the "Facade Services" and the "Main Services". This bifurcation is vital for ensuring a clear separation of concerns and facilitating an efficient migration process.

Streamlining Migration Through Layered Classification

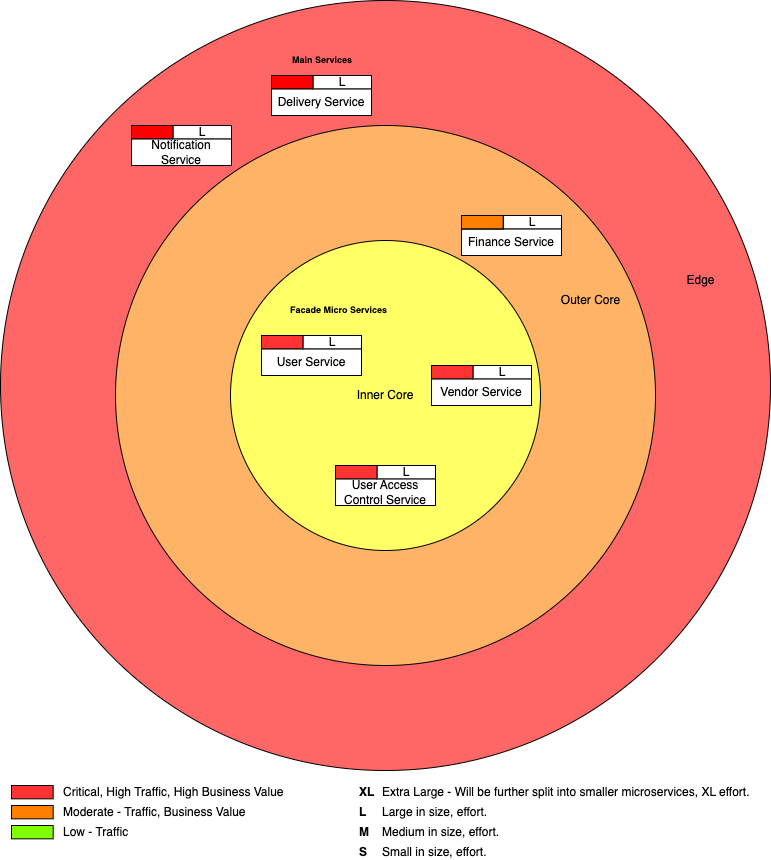

I like to visualize modules as if they were placed in the layers of the Earth: inner core, outer core, mantle, and crust. To simplify, I combine the mantle and crust into a single layer called the edge. The deeper the layer, the harder it is to move a module out of the monolith, because other modules depend on it. So it's vital to classify modules accurately, identify which ones sit at the core, and create facade services for those.

Layered classification for Monolith to Microservices migration


The main services are then selected from the edge first, followed by the outer core, leaving only the inner core in the monolith as the last part to be migrated. By that point, however, the core already exists among the microservices in the form of facade services. All we have to do is promote them to main services, making whatever changes our plan for each service requires.
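One rough way to get a first pass at this classification is to count how many other modules depend on each module: modules nobody depends on sit at the edge, and heavily depended-on modules sit near the core. The sketch below is only a heuristic under that assumption. The function names and the dependency map are hypothetical, and a real classification would also weigh data ownership, traffic, and team boundaries.

```go
package main

import (
	"fmt"
	"sort"
)

// DependentCounts returns, for every module, how many modules depend on it.
// deps maps a module to the modules it depends on.
func DependentCounts(deps map[string][]string) map[string]int {
	counts := make(map[string]int, len(deps))
	for module, targets := range deps {
		if _, ok := counts[module]; !ok {
			counts[module] = 0 // make sure modules without dependents are listed
		}
		for _, t := range targets {
			counts[t]++
		}
	}
	return counts
}

// MigrationOrder lists modules from the edge (fewest dependents) to the core
// (most dependents). Ties are broken by name so the result is deterministic.
func MigrationOrder(deps map[string][]string) []string {
	counts := DependentCounts(deps)
	order := make([]string, 0, len(counts))
	for m := range counts {
		order = append(order, m)
	}
	sort.Slice(order, func(i, j int) bool {
		if counts[order[i]] != counts[order[j]] {
			return counts[order[i]] < counts[order[j]]
		}
		return order[i] < order[j]
	})
	return order
}

func main() {
	// Hypothetical dependency map for the e-commerce example.
	deps := map[string][]string{
		"notification": {"user"},
		"delivery":     {"vendor", "user"},
		"user":         {},
		"vendor":       {},
	}
	fmt.Println(MigrationOrder(deps))
}
```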

However, it's important to note that this approach may not necessarily be ideal for every use case. Feel free to adapt and customize it according to your specific needs and requirements.

Facade Services

The Facade Services act as simplified interfaces to the core tables present within the monolith's database. Their primary responsibility is to monitor the changes in their respective tables in the monolith's database, which are streamed via Debezium and Kafka, process these changes, and maintain an updated state in their local data stores. Although these services may be more complex in real life, with potential schema changes, for the purposes of this article, we'll consider their core job - to sync the monolith's core data in real-time and make it accessible to other microservices.

For instance, during the initial phase of our migration, we designate services such as the User service and the Vendor service as our Facade Services. Each of these services is associated with a core table from the monolith (i.e., the User service corresponds to the 'User' table, and the Vendor service corresponds to the 'Vendor' table). These services consume the change events specific to their associated table, enabling them to mirror the state of the data in the monolith's database.

In subsequent stages, these facades can be elevated to the status of main services, with or without additional modifications.

Main Services

While the Facade Services are busy maintaining synchronization with the monolith, the Main Services take on the responsibility of introducing and managing new functionalities that were not present in the monolithic application. These services might interact with one or more Facade Services to fetch the data they need to perform their functions.

For example, during the first phase of our migration, we introduce the Notification service and the Delivery service as our Main Services. The Notification service, for instance, may need to fetch user data from the User service (a Facade Service) to send personalized notifications. Similarly, the Delivery service might need to fetch vendor data from the Vendor service (another Facade Service) to create its delivery records.
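The relationship between a Main Service and a Facade Service can be sketched as a small interface. In the sketch below, `UserDirectory`, `Notifier`, and the in-memory implementation are hypothetical names used for illustration. In practice the lookup would be a network call to the User service rather than a map access.

```go
package main

import (
	"errors"
	"fmt"
)

// User is the slice of user data the Notification service needs.
type User struct {
	ID        int64
	FirstName string
	Email     string
}

// ErrUserNotFound is returned when the facade has no record for an ID.
var ErrUserNotFound = errors.New("user not found")

// UserDirectory is the contract the Notification service expects from the
// User facade (hypothetical interface).
type UserDirectory interface {
	GetUser(id int64) (User, error)
}

// inMemoryDirectory stands in for a client of the User service.
type inMemoryDirectory map[int64]User

func (d inMemoryDirectory) GetUser(id int64) (User, error) {
	u, ok := d[id]
	if !ok {
		return User{}, ErrUserNotFound
	}
	return u, nil
}

// Notifier is a Main Service: it owns new functionality and asks the facade
// for user data instead of reading the monolith's database.
type Notifier struct {
	Users UserDirectory
}

// OrderConfirmation builds a personalized message for the given user.
func (n Notifier) OrderConfirmation(userID int64, orderRef string) (string, error) {
	u, err := n.Users.GetUser(userID)
	if err != nil {
		return "", fmt.Errorf("look up user %d: %w", userID, err)
	}
	return fmt.Sprintf("To: %s\nHi %s, your order %s is confirmed.", u.Email, u.FirstName, orderRef), nil
}

func main() {
	n := Notifier{Users: inMemoryDirectory{
		100001: {ID: 100001, FirstName: "John", Email: "johndoe@mail.com"},
	}}
	msg, err := n.OrderConfirmation(100001, "A-17")
	if err != nil {
		panic(err)
	}
	fmt.Println(msg)
}
```

Depending on an interface rather than a concrete client also means the Notification service does not need to change when the User facade is later promoted to a main service.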

This thoughtfully defined structure encourages a clean, organized transition towards a microservices architecture, where each service has a clear, single responsibility, significantly reducing complexity and enhancing maintainability.

An Incremental Approach: The Strangler Fig Pattern

The transition from a monolithic structure to a microservices architecture isn't an overnight transformation but a progressive evolution. To successfully execute this change, we harness the power of the Strangler Fig Pattern, a software development approach that allows for gradual, phased replacement of specific parts of the system with new services.

Named after the strangler fig tree, this pattern is inspired by the unique growth pattern of this species. Strangler figs are trees that germinate and grow on other trees. They extend their roots downward and envelop the host tree, eventually outliving and replacing it.

In the context of our application, the "host tree" is our monolithic system, and the "strangler fig" represents our new microservices. Initially, both the monolith and the new microservices coexist, with the microservices beginning to take over some responsibilities from the monolith. This is typically accomplished by redirecting some requests to the new services, while the remainder of the traffic still goes to the monolith.

Over time, as we continue to develop more microservices, each taking over a specific function from the monolith, they collectively start to form a significant part of our system's functionality. Eventually, the microservices "strangle" the monolith by completely taking over all its responsibilities. Once all functionality is handed over to the microservices and the monolith no longer serves any requests, it can be safely decommissioned, marking the completion of the migration.

This pattern allows for an orderly, controlled transition where the risk is mitigated as each part of the monolith is incrementally replaced. This incremental approach is key to a successful migration, as it minimizes disruption and allows for continual delivery of features throughout the transition process.
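The request redirection described above can be sketched as a small routing table that sends migrated path prefixes to new services and everything else to the monolith. The `Router` type, the paths, and the backend addresses below are illustrative assumptions. In a real deployment this decision usually lives in an API gateway, ingress, or reverse proxy rather than in application code.

```go
package main

import (
	"fmt"
	"strings"
)

// Router decides which backend should serve a request path.
type Router struct {
	Monolith string            // default backend for everything not yet migrated
	Migrated map[string]string // path prefix -> new service backend
}

// Backend returns the backend for a path, preferring the longest migrated
// prefix and falling back to the monolith.
func (r Router) Backend(path string) string {
	best, target := "", r.Monolith
	for prefix, backend := range r.Migrated {
		// Match whole path segments only, so "/users" does not match "/users-export".
		matches := path == prefix || strings.HasPrefix(path, prefix+"/")
		if matches && len(prefix) > len(best) {
			best, target = prefix, backend
		}
	}
	return target
}

func main() {
	r := Router{
		Monolith: "http://monolith",
		Migrated: map[string]string{
			"/notifications": "http://notification-service",
			"/deliveries":    "http://delivery-service",
		},
	}
	for _, p := range []string{"/notifications/42", "/deliveries", "/orders/7"} {
		fmt.Println(p, "->", r.Backend(p))
	}
}
```

As more functionality moves out, entries are added to `Migrated` until nothing falls through to the monolith, at which point it can be decommissioned.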

The Database Per Service Pattern: Enabling Decoupling and Autonomy

A fundamental element in our migration strategy is the 'Database per Service' pattern, recognized as a best practice within the microservices realm that advocates for each microservice to have its dedicated database. For our Facade Services, this pattern comes to fruition as each service maintains its local data store, mirroring the corresponding table from the monolith's database.

The changes in the monolith's database are captured by Debezium, streamed by Kafka, and consumed by the respective Facade Service, which processes the change and updates its local store. This ensures that each Facade Service independently maintains a replica of its corresponding monolith table, without querying the monolith's database directly.

The pattern is equally pivotal for our Main Services. These services handle new functionality, and each manages its own database for any new data it generates or needs. When a Main Service needs data from the monolith's database, it interacts with the appropriate Facade Service. This arrangement reinforces the decoupling of services, eliminating direct dependencies on the monolith's database.

The benefits of this pattern are manifold:

- Decoupling: Each service having its own database means that the services are loosely coupled. Changes in one service's database schema do not impact any other service.

- Data Consistency: As each Facade Service maintains a replica of its respective monolith table, kept current by the change stream, the microservices work from data that matches the monolith, typically within a short replication lag.

- Performance: Each service can choose the database type that best fits its needs, improving performance and efficiency.

- Fault Isolation: If one service's database encounters issues, it doesn't affect the operation of other services.

Thus, the 'Database per Service' pattern is an essential part of our microservices migration strategy, contributing to service autonomy, reducing inter-service dependencies, and enabling a smooth transition from the monolithic architecture.

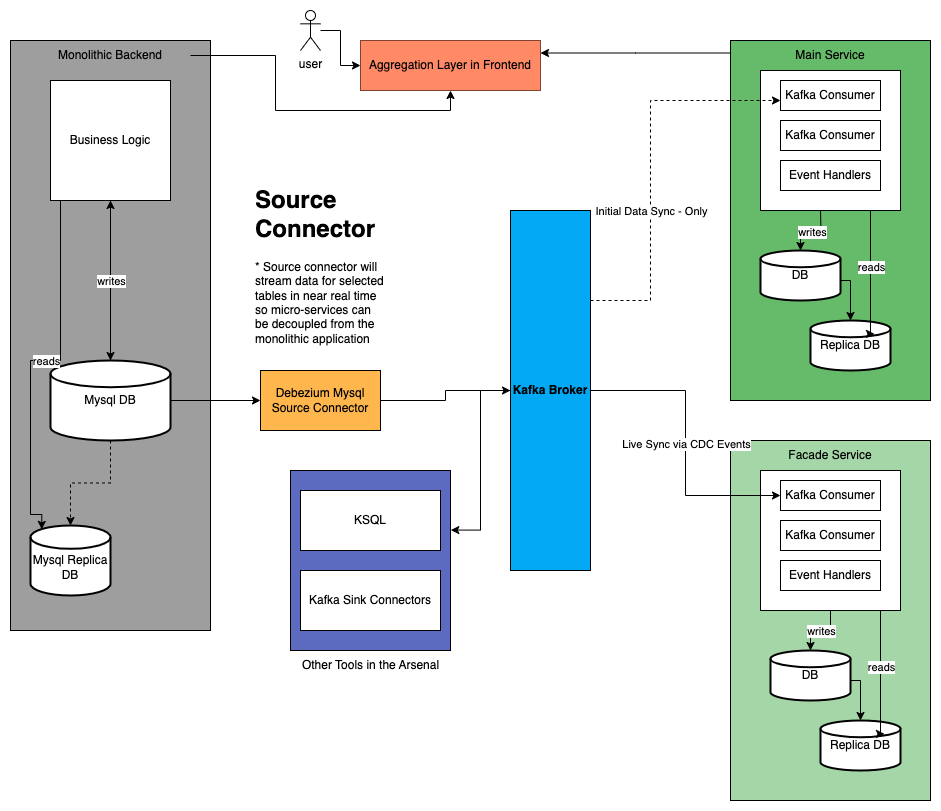

Use of Event-Driven Architecture

Central to our architectural approach is the principle of an Event-Driven Architecture (EDA). In an EDA, the flow of the program is determined by events such as user actions, sensor outputs, or messages from other programs.

Use of Event-Driven Architecture, Debezium, CDC, Kafka


In the context of our migration strategy, Debezium and Kafka form the backbone of our EDA. Debezium, with its Change Data Capture functionality, triggers events based on changes in our database, such as insertions, updates, or deletions in the database tables. These changes are converted into events, which are then relayed to Kafka.

Kafka serves as a distributed, fault-tolerant event log that maintains and transmits these events as a continuous stream. This forms an event backbone, providing a resilient, reliable pipeline through which data changes flow in real-time.

Each of our microservices is designed to listen to and consume these events from Kafka. But unlike a traditional database-driven application, where services might periodically query a database for updates, an event-driven microservice reacts to changes as they happen in real-time. This ability to react and adapt to data changes in real-time is the essence of an EDA.

Each microservice is a self-contained unit that operates independently within its own domain, and only reacts to events that are relevant to its function. This setup ensures a high degree of decoupling between microservices, thereby enhancing their fault tolerance and scalability.

For instance, our User service might only listen to changes in the 'User' table, while the Vendor service is tuned to events from the 'Vendor' table. This ensures that services are not inundated with irrelevant information, reducing noise and enhancing efficiency.
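This per-table filtering falls out of how Debezium names topics: by default the MySQL connector writes each table's events to a topic named after the configured topic prefix, the database, and the table, joined with dots. The sketch below derives those names and records which service listens to which topic. The prefix `monolith`, the database `shop`, and the service names are hypothetical.

```go
package main

import "fmt"

// TopicFor builds a topic name following Debezium's default
// <prefix>.<database>.<table> convention for the MySQL connector.
func TopicFor(prefix, database, table string) string {
	return prefix + "." + database + "." + table
}

// Subscriptions maps a service name to the topics it consumes.
type Subscriptions map[string][]string

// Wants reports whether the service is subscribed to the topic.
func (s Subscriptions) Wants(service, topic string) bool {
	for _, t := range s[service] {
		if t == topic {
			return true
		}
	}
	return false
}

func main() {
	userTopic := TopicFor("monolith", "shop", "User")
	vendorTopic := TopicFor("monolith", "shop", "Vendor")

	subs := Subscriptions{
		"user-service":   {userTopic},
		"vendor-service": {vendorTopic},
	}

	fmt.Println(userTopic)
	fmt.Println(subs.Wants("user-service", userTopic))
	fmt.Println(subs.Wants("user-service", vendorTopic))
}
```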

In essence, EDA enables our microservices to stay updated with the latest data, process it, and possibly generate further events, all in real-time. This ensures seamless, real-time data exchange between our services and provides a robust, scalable backbone to our evolving system.

Future Phases: Scaling and Refining Services

As we advance along our migration journey, the landscape of our microservice architecture continues to expand. More Facade and Main Services will be incrementally introduced, each catering to a unique domain or functionality within our application.

In subsequent phases, we will roll out new Facade Services corresponding to additional core tables in the monolith's database. Each new Facade Service mirrors a different slice of the monolithic database, liberating these data and enabling them to function with heightened autonomy. These data sets will then be more readily available to the constellation of microservices that make up our evolving architecture.

Simultaneously, we will be enriching our system with an increasing number of Main Services. These will either introduce new functionalities or assume responsibility for more complex tasks that the monolith had previously handled. Each Main Service will be custom-built to manage specific functions or related groupings of tasks, subscribing to events from one or more Facade Services to stay updated with the freshest data.

This phased rollout is designed to foster organic scaling. Each new service is integrated and refined incrementally to ensure system balance during the transition. It enables us to manage complexity effectively, allowing thorough testing and optimization of each service before the next is introduced.

Importantly, this design inherently supports our product development roadmap. Since new features are encapsulated in their services, they can be developed, tested, and deployed independently of others. This means that the introduction of new features or changes in the existing ones can be managed with minimal impact on the overall system. It also allows us to maintain a consistent pace of feature development, as we don't have to put our entire system on hold to accommodate new features or changes.

The modular approach also brings benefits of independent scalability and deployability. Each service can be scaled, deployed, or updated independently without affecting other parts of the system. This flexibility is critical in modern software development, where agility and responsiveness to change are key.

Ultimately, what emerges is not merely a collection of individual services, but a harmonious, interconnected ecosystem of microservices, each performing its role, yet collectively propelling the whole system forward.

Conclusion

Migrating from a monolithic architecture to microservices is a challenging yet rewarding process. By leveraging the power of Debezium, Kafka, and well-defined service boundaries, we can ensure a smooth transition with minimal disruptions to our current operations. This journey not only improves our system's scalability and reliability but also opens doors to new features and enhancements that might have been difficult to implement in a monolithic setup.